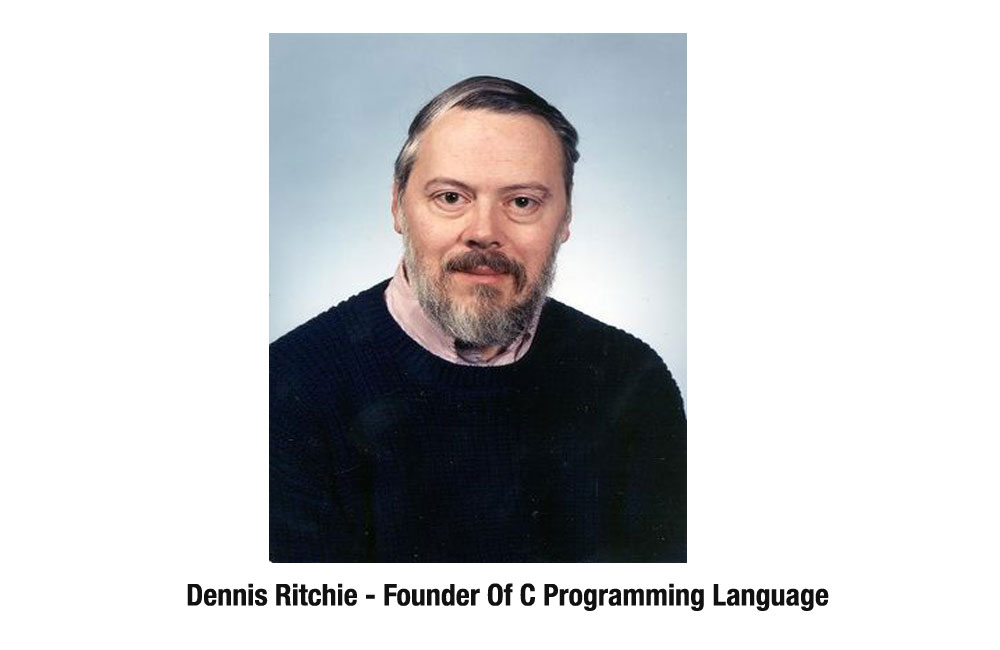

Inventor of the popular programming language C and co-inventor of the UNIX operating system, Dennis Ritchie (1941-2011) was a giant among software developers. The C language, which Ritchie created in the early 1970s, fundamentally changed the way software is written. Today, C and its derivatives are used in a vast range of devices and operating systems, while UNIX and its descendants run many of the world's servers and data systems.

Ritchie worked at Bell Labs for four decades. Yet the company withdrew its support from Multics, an ambitious time-sharing project on which he and his colleague Ken Thompson had been working. The two men then built a simpler operating system, UNIX, designed to run on smaller minicomputers rather than mainframes. Ritchie also developed a compact programming language, C. Once UNIX was rewritten in C, it could be moved to different types of hardware.

With the rise of the Internet, Linus Torvalds, a Finnish software engineer, created Linux, a UNIX-like system free of proprietary restrictions, which helped pave the way for open-source systems. Much modern software is written in C's more evolved descendants, including C++, Objective-C (favoured by Apple) and C# (promoted by Microsoft). Java, a staple of the Digital Age that powers many Internet applications, borrows its syntax from C and so owes much to Ritchie. UNIX-like systems power millions of Apple and Android mobile devices, Internet Service Providers' server farms and over a billion gadgets.

Ritchie's work became a foundation of open-source software. His textbook The C Programming Language, written with Brian Kernighan, remains a classic. Steve Jobs, co-founder of Apple, built the Mac's modern operating system, Mac OS X, on a UNIX foundation: one genius recognised the value of another's work. Ritchie was a mild-mannered, humble man. He earned his undergraduate degree in physics and applied mathematics, and said he was not 'smart' enough to be a physicist. But the world continues to be enriched by his legacy.

C's popularity owed much to its ease of use and to the interactive UNIX environment in which it grew up. Programming no longer involved punched cards or long waits for results from the machine: typing a command brought an immediate response. Moreover, the timing of its release for public use was just right. A new generation of smaller, cheaper, interactive minicomputers was challenging the mainframes, and the change was led by research groups and individuals.

C fundamentally changed programming: the same program could be made to run on different types of machines with little modification. The impact of Ritchie and Thompson on the computing world and the Internet is indelible.

Software-defined Networking

Software will increasingly play a new role: re-programming how networks operate. To understand this, recall the pioneering technology of packet switching, which speeded up data transmission over the Internet. When the Internet was designed in the 1960s, it was meant mainly for transferring files and e-mail. Later years brought applications its founders never envisaged: uploading videos (YouTube), Internet phone calls (Skype), video streaming (Netflix) and networked games (Zynga). Yet the basic infrastructure of the Internet has hardly changed: in the last decade, although the amount of personal data on the Internet has grown enormously, the hardware has stayed essentially the same.

The reason is basic: data are still transmitted across the Internet much as they were in the early days of packet switching. Routers and switches, the basic components of the Internet, determine how data travel. Observers found that the data flow was inefficient but could do little about it, because the hardware is owned by private companies that were reluctant to allow any tinkering with their switches and routers. Testing new transmission ideas was nearly impossible, since the only realistic test-bed, the Internet itself, had to remain in continuous operational use.

This situation has started to change. Some researchers decided to take a second look at packet switching. A group of computer scientists led by Nick McKeown of Stanford University developed a standard called OpenFlow, which lets researchers use their own software to define new ways of directing data flows through switches and routers on college campuses. The approach, known as software-defined networking, grew out of work at Stanford and the University of California, Berkeley. It separates a network's intelligence (the control path, or control plane) from fast packet forwarding (the data path, or data plane).
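To make the control/data split concrete, here is a minimal Python sketch, assuming a toy switch whose flow table holds prioritised match-action rules and a separate controller that installs them. The names `FlowEntry`, `Switch`, `Controller`, `prioritise_port` and the action strings such as `"forward:3"` are hypothetical illustrations, not the OpenFlow wire protocol or any real controller's API.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FlowEntry:
    # Match fields: None acts as a wildcard that matches any value.
    dst_ip: Optional[str]
    tcp_port: Optional[int]
    priority: int
    action: str  # hypothetical action strings, e.g. "forward:2" or "drop"


@dataclass
class Switch:
    """Data path: only looks packets up in its flow table, never decides policy."""
    name: str
    flow_table: list = field(default_factory=list)

    def install(self, entry: FlowEntry) -> None:
        self.flow_table.append(entry)
        # Highest priority first, so the first matching rule wins.
        self.flow_table.sort(key=lambda e: e.priority, reverse=True)

    def handle(self, packet: dict) -> str:
        for e in self.flow_table:
            if e.dst_ip is not None and e.dst_ip != packet["dst_ip"]:
                continue
            if e.tcp_port is not None and e.tcp_port != packet["tcp_port"]:
                continue
            return e.action
        return "send_to_controller"  # table miss: ask the control path


class Controller:
    """Control path: decides policy centrally and pushes rules to every switch."""

    def __init__(self, switches):
        self.switches = switches

    def prioritise_port(self, port: int, out_port: int) -> None:
        for sw in self.switches:
            sw.install(FlowEntry(None, port, priority=100, action=f"forward:{out_port}"))


if __name__ == "__main__":
    sw = Switch("s1")
    sw.install(FlowEntry("10.0.0.5", None, 10, "forward:1"))
    Controller([sw]).prioritise_port(5004, 3)
    print(sw.handle({"dst_ip": "10.0.0.5", "tcp_port": 5004}))  # forward:3
    print(sw.handle({"dst_ip": "10.0.0.9", "tcp_port": 80}))    # send_to_controller
```

Changing how traffic flows here means calling the controller, not reconfiguring each switch by hand, which is the essence of the software-defined approach.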

The new software gives access to the flow tables (rules that tell switches and routers how to direct network traffic), so that the data flow can be improved through software without affecting the proprietary routing instructions unique to each company's hardware. A map of the network is built and the most efficient route is chosen. Researchers found that programmable networks could stream high-definition video more smoothly, reduce energy consumption in data centres and even help counter computer viruses. A non-profit organisation, the Open Networking Foundation, has been set up to encourage research in this area and to let programmers take control of computer networks. As many as 23 leading Internet companies formed the Foundation to encourage programmers to write the software needed to reprogram networks.
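One common way a central controller could pick "the most efficient route" over its network map is Dijkstra's shortest-path algorithm. The sketch below assumes that efficiency means lowest total link cost and that the map is a dictionary of link costs; `shortest_path` and `flow_rules` are hypothetical names, and real controllers may weigh other metrics such as load or latency.

```python
import heapq


def shortest_path(graph, src, dst):
    """Dijkstra over a network map where graph[u] = {v: link_cost}."""
    dist = {src: 0}
    prev = {}
    heap = [(0, src)]
    visited = set()
    while heap:
        d, u = heapq.heappop(heap)
        if u in visited:
            continue  # stale heap entry
        visited.add(u)
        if u == dst:
            break
        for v, cost in graph.get(u, {}).items():
            nd = d + cost
            if nd < dist.get(v, float("inf")):
                dist[v] = nd
                prev[v] = u
                heapq.heappush(heap, (nd, v))
    if dst not in dist:
        return None, float("inf")
    # Walk the predecessor links back from the destination.
    path = [dst]
    while path[-1] != src:
        path.append(prev[path[-1]])
    return path[::-1], dist[dst]


def flow_rules(path, dst_ip):
    """Turn a path into one hypothetical (switch, match_dst, next_hop) rule per hop."""
    return [(hop, dst_ip, nxt) for hop, nxt in zip(path, path[1:])]


if __name__ == "__main__":
    network_map = {"A": {"B": 1, "C": 4}, "B": {"C": 1, "D": 5}, "C": {"D": 1}, "D": {}}
    path, cost = shortest_path(network_map, "A", "D")
    print(path, cost)  # ['A', 'B', 'C', 'D'] 3
    print(flow_rules(path, "10.0.0.7"))
```

Because the controller sees the whole map, it can recompute routes and push new rules whenever a link cost changes, rather than relying on each router's local view.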

In another innovation, known as Code On, a change in how packets are transmitted yields high-quality streaming video over poor network links. The technique, a form of network coding, sends each packet as an algebraic equation that combines several original packets; the receiver solves these equations to recreate the originals, so missing packets can be reconstructed from the ones that do arrive. The process increases the data load, but the added redundancy lets the receiving end recover all the packets transmitted. The degree of redundancy can also be adjusted to the quality of the network. The merit of the innovation is that it calls for no hardware change in the Internet. When too many packets are missing for Code On to recover them, the system simply lets normal TCP handle them at its own pace.
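The "packets as equations" idea can be illustrated with random linear network coding over GF(2): each coded packet is the XOR of a random subset of the originals, tagged with a bit mask saying which ones, and the receiver recovers the originals by Gaussian elimination once it holds enough independent equations. This is a simplified sketch, not Code On's actual scheme; practical systems typically work over larger fields such as GF(2^8), and `encode`, `decode` and `xor_bytes` are hypothetical names.

```python
import random


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(packets, n, rng):
    """Produce n coded packets; bit i of coeffs says whether packet i is included."""
    k, size = len(packets), len(packets[0])
    coded = []
    for _ in range(n):
        coeffs = 0
        while coeffs == 0:  # skip the useless all-zero combination
            coeffs = rng.getrandbits(k)
        payload = bytes(size)
        for i in range(k):
            if coeffs >> i & 1:
                payload = xor_bytes(payload, packets[i])
        coded.append((coeffs, payload))
    return coded


def decode(coded, k):
    """Gaussian elimination over GF(2); returns the originals, or None if rank < k."""
    pivots = {}  # pivot bit -> (coeffs, payload), kept in reduced form
    for coeffs, payload in coded:
        # Cancel every existing pivot bit from the incoming equation.
        for bit, (pc, pp) in pivots.items():
            if coeffs >> bit & 1:
                coeffs ^= pc
                payload = xor_bytes(payload, pp)
        if coeffs == 0:
            continue  # linearly dependent: no new information
        bit = coeffs.bit_length() - 1
        # Clear the new pivot bit from the rows already stored.
        for b, (pc, pp) in list(pivots.items()):
            if pc >> bit & 1:
                pivots[b] = (pc ^ coeffs, xor_bytes(pp, payload))
        pivots[bit] = (coeffs, payload)
    if len(pivots) < k:
        return None
    return [pivots[i][1] for i in range(k)]


if __name__ == "__main__":
    rng = random.Random(7)
    originals = [b"ABCD", b"EFGH", b"IJKL"]
    sent = encode(originals, 6, rng)         # redundancy: 6 equations for 3 packets
    received = sent[:2] + sent[3:5]          # pretend two coded packets were lost
    print(decode(received, len(originals)))  # the originals, or None if too few survive
```

Sending more coded packets than originals is the adjustable redundancy the article describes: the sender can raise `n` on a lossy link and lower it on a clean one.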

Cloud computing demands a review of the traditional packet-switching method. In the original design, intelligence was meant to reside largely at the end points of the network, namely the computers. The routers simply read each packet's address and forwarded it to the next node; the contents were not examined en route. In cloud computing, the intelligence that controls the flow is centralised. The new approach would allow on-demand express lanes for voice and data traffic and let companies combine several fibre-optic cables during heavy traffic.
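The traditional "read the address, forward to the next node" behaviour can be sketched as a longest-prefix-match lookup, which is how IP routers choose a next hop from a forwarding table. The table entries and hop names below are hypothetical. Note that `next_hop` looks only at the destination address and never at the packet's contents, which is exactly the division of labour the original Internet assumed.

```python
import ipaddress

# Hypothetical forwarding table: destination prefix -> next hop.
FORWARDING_TABLE = {
    "0.0.0.0/0": "isp-uplink",       # default route
    "10.0.0.0/8": "core-router",
    "10.1.0.0/16": "campus-router",
    "10.1.2.0/24": "lab-switch",
}


def next_hop(dst: str, table=FORWARDING_TABLE) -> str:
    """Return the next hop of the most specific (longest) prefix containing dst."""
    addr = ipaddress.ip_address(dst)
    best = None  # (prefix length, next hop)
    for prefix, hop in table.items():
        net = ipaddress.ip_network(prefix)
        if addr in net and (best is None or net.prefixlen > best[0]):
            best = (net.prefixlen, hop)
    return best[1] if best else "drop"


if __name__ == "__main__":
    for dst in ("10.1.2.33", "10.1.9.1", "10.200.0.1", "8.8.8.8"):
        print(dst, "->", next_hop(dst))
```

Real routers use specialised data structures (tries or hardware lookup tables) rather than a linear scan, but the matching rule is the same.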

On Second Matters

How vulnerable even sophisticated software can be was dramatically shown in 2012, when the world's timekeepers added an extra second to 30 June to compensate for irregularities in the Earth's rotation. The leap second was inserted into Coordinated Universal Time (UTC), which is derived from International Atomic Time (TAI) but adjusted so that clocks stay in step with solar time. Accordingly, 30 June 2012 had 86,401 seconds, the extra one appearing as 23:59:60 UTC.
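The arithmetic behind the 86,401-second day, and one reason software can stumble over it, can be shown with Python's standard `datetime` module, which, like POSIX time, has no way to represent 23:59:60. The sketch hard-codes the published TAI - UTC offsets of 34 s before and 35 s after the 2012 leap second, so it is only valid around that date; the helper names are hypothetical.

```python
from datetime import datetime, timezone


def tai_minus_utc(utc: datetime) -> int:
    """TAI - UTC in seconds; hard-coded, valid only from 2009-01-01 to 2015-06-30."""
    leap_2012 = datetime(2012, 7, 1, tzinfo=timezone.utc)
    return 35 if utc >= leap_2012 else 34


def elapsed_si_seconds(start: datetime, end: datetime) -> int:
    """Seconds that actually elapsed, adding leap seconds that UTC arithmetic hides."""
    naive = int((end - start).total_seconds())
    return naive + tai_minus_utc(end) - tai_minus_utc(start)


def can_represent_leap_second() -> bool:
    try:
        datetime(2012, 6, 30, 23, 59, 60, tzinfo=timezone.utc)
        return True
    except ValueError:  # datetime only accepts seconds 0..59
        return False


if __name__ == "__main__":
    start = datetime(2012, 6, 30, tzinfo=timezone.utc)
    end = datetime(2012, 7, 1, tzinfo=timezone.utc)
    print(int((end - start).total_seconds()))  # 86400: the leap second is invisible
    print(elapsed_si_seconds(start, end))      # 86401
    print(can_represent_leap_second())         # False
```

The one-second gap between the two results is the kind of discrepancy that operating systems and applications must reconcile when a leap second arrives.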

Many computers use the Network Time Protocol (NTP) to keep their clocks synchronized with UTC. Although the extra second was announced well in advance, several servers running some versions of Java and Linux choked when it arrived, disrupting the websites they supported. Those affected included LinkedIn, Meetup, Foursquare, Yelp, StumbleUpon and Instagram. The servers have since been restored to normal working.
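NTP itself announces a pending leap second to its clients. In the NTPv4 header (RFC 5905), the first byte packs a 2-bit Leap Indicator, a 3-bit version number and a 3-bit mode. The sketch below only decodes that byte from a packet already received; it performs no network I/O, and `parse_first_byte` and `leap_warning` are hypothetical names.

```python
# Leap Indicator values as defined for the NTPv4 header (RFC 5905).
LEAP_MEANINGS = {
    0: "no warning",
    1: "last minute of the day has 61 seconds",
    2: "last minute of the day has 59 seconds",
    3: "unknown (clock unsynchronized)",
}


def parse_first_byte(b: int):
    """Split the first header byte into (leap indicator, version, mode)."""
    li = (b >> 6) & 0b11
    vn = (b >> 3) & 0b111
    mode = b & 0b111
    return li, vn, mode


def leap_warning(packet: bytes) -> str:
    if len(packet) < 48:
        raise ValueError("an NTP header is at least 48 bytes long")
    li, _, _ = parse_first_byte(packet[0])
    return LEAP_MEANINGS[li]


if __name__ == "__main__":
    # 0x5C = 0b01_011_100: leap indicator 1, version 3, mode 4 (server).
    reply = bytes([0x5C]) + bytes(47)
    print(parse_first_byte(reply[0]))  # (1, 3, 4)
    print(leap_warning(reply))         # last minute of the day has 61 seconds
```

A client that sees Leap Indicator 1 during 30 June knows the day will end at 23:59:60; how the operating system then applies that extra second is where the 2012 problems arose.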

The demand for professionals keeps changing with technological progress. We need appropriate computer science syllabi and adequate incentives to prevent brain drain. Just as demand for mainframe professionals was high between 2002 and 2008, there is today a growing demand for expertise in cloud computing and in mobile software for Android and similar platforms. It has been suggested that teaching computing skills should ideally begin in childhood, since some neuroscientists argue that the brain's capacity to absorb new skills peaks at around 11 to 15 years of age.